Leonie posted this post with fabulous links, and I'm cross-posting it due to the importance of Fred Smith's work.

The GEM high stakes testing committee, which Fred has worked with, has been working on supporting parents who are willing to do a test case in NY State (one of the only states without a policy) by opting out of the test (do their kids get left back?). Even within the anti-HST community there is some controversy as to whether pushing a program of opting out is worthwhile at this point (if high scoring kids opt out, the school rating goes down), but there will be a push forward.

From Leonie Haimson: Feb 22, 2012

http://nycpublicschoolparents.blogspot.com/2012/02/testing-expert-points-out-severe-flaws.html

Testing expert points out severe flaws in NYS exams and urges parents to boycott them this spring!

In the last few days, many columns have pointed out the fundamental flaws in the new NY teacher evaluation system: by Aaron Pallas of Columbia University; Carol Burris, Long Island principal; education historian Diane Ravitch (who has written not one but two excellent critiques); and Juan Gonzalez, investigative reporter for the Daily News. All point out that despite the claim that the new evaluation system is supposed to be based only 20-40% on state exams, test scores in fact will trump all, since any teacher rated "ineffective" on their students' standardized exams will be rated "ineffective" overall.

To add insult to injury, the NYC Department of Education is expected to release the teacher data reports to the media tomorrow -- with the names of individual teachers attached. These reports are based SOLELY on the change in the test scores of each teacher's students, filtered through a complicated formula that is supposed to adjust for factors outside teachers' control, which is essentially impossible to do. Moreover, the margins of error are so large that a teacher with a high rating one year is often rated extremely low the next. Sign our petition now, if you haven't yet, urging the papers not to publish these reports; and read the outraged comments of parents, teachers, principals and researchers, pointing out how unreliable these reports are as an indication of teacher quality.

Though most of the critiques so far focus on the inherently volatile nature and large margins of error in any such calculation, here in NY State we have a special problem: the state tests themselves have been fatally flawed for many years. There has been rampant test score inflation over the past decade; many of the test questions themselves are amazingly dumb and ambiguous; and there are other severe problems with the scaling and the design of these exams that only testing experts fully understand. Though the State Education Department claims to have now solved these problems, few actually believe this to be the case.

As further evidence, see Fred Smith's analysis below. Fred is a retired assessment expert for the NYC Board of Education, who has written widely on the fundamental flaws in the state tests. Here, he shows how deep problems remain in their design and execution -- making their results, and the new teacher evaluation system and teacher data reports based upon them, essentially worthless. He goes on to urge parents to boycott the state exams this spring. Please leave a comment about whether you would consider keeping your child out of school for this purpose!

Fred Smith:

New York State’s Testing Program (NYSTP) has relied on a series of deeply flawed exams given to 1.2 million students a year. This conclusion is supported by comparing English Language Arts (ELA) and Math data from 2006 to 2011 with National Assessment of Educational Progress (NAEP) data, but not in the usual way.

Rather than ponder discrepancies in performance and growth on the national versus state exams, I analyzed the items: the building blocks of the NAEP and NYSTP that underlie their reported results.

NAEP samples fourth and eighth grade students in reading and math every two years. Achievement and improvement trends are studied nationwide and broken down state-by-state. The results spur biennial debate over two questions:

Why are results obtained on state-imposed exams far higher than proficiency as measured by NAEP? Why do scores from state tests increase so much, while NAEP shows meager changes over time?

By concentrating on results (counting how many students have passed state standards; or trying to gauge the achievement gap; or devising complicated value-added teacher evaluation models, school grading systems and other multi-variate formulas), attention has been diverted from the instruments themselves.

In 2009, the year statewide scores peaked, Regents Chancellor Merryl Tisch discredited NYSTP’s implausibly high achievement levels and low standards. She pledged reform, and “more rigorous testing” became the catch phrase. She knew that New York’s yearly pursuit and celebratory announcements of escalating numbers belied a state of educational decline.

It was an admission of how insidious the results had been, not to mention the precarious judgments that rested on them. Unsustainable outcomes became a crisis. But Albany refuses to address the core problem.

The NAEP and NYSTP exams contain multiple-choice and open-ended items. The latter tap a higher order of knowledge. They ask students to interpret reading material and provide a written response or to work out math problems and show how they solved them, not just select or guess a right answer.

Ergo, on well-developed tests students will likely get a higher percentage of correct answers on machine-scored multiple-choice questions. In addition, the same students should do well, average or poorly on both types of items.

NAEP meets the dual expectations of order and correlation between its two sets of items; the NYSTP exams, which are given in grades three through eight, do not. Here are the contrasting pictures for the fourth grade math test.

NAEP’s multiple-choice items yield averages that are substantially higher than its open-ended ones. The distance between them is consistent over time, which is another way of saying that the averages run along parallel lines. There is an obvious smoothness to the data.

Items on the NYSTP exams defy such rhyme and reason. The percent correct on each set of items goes up one year and down the next in a choppy manner. In 2008, performance on the teacher-scored open-ended items exceeded the level reached on the multiple-choice items. In 2011, when the tests were supposed to have gained rigor, the open-ended math questions were 26.2% easier than the NAEP’s.

The reversals reveal exams made of items working at cross purposes, generating data that go north and south at the same time. That’s the kind of compass the test publisher, CTB/McGraw-Hill, has sold to the State since 2006 to the tune of $48 million.
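For readers who want to run this kind of check on item-level averages themselves, here is a minimal Python sketch of the two expectations described above: multiple-choice averages should sit above open-ended averages every year, and the gap between them should stay roughly steady. The function name, the 3-point tolerance and the numbers are all hypothetical placeholders chosen for illustration; they are not the actual NAEP or NYSTP figures, and the sketch only looks at yearly averages, not at whether the same students do well on both item types.

```python
def check_item_consistency(mc, oe, max_gap_spread=3.0):
    """Compare yearly average percent correct on two item types.

    mc, oe: dicts mapping year -> average percent correct on
    multiple-choice and open-ended items (hypothetical inputs).
    max_gap_spread: assumed tolerance, in percentage points, for how
    much the gap may vary before the lines stop looking parallel.
    """
    years = sorted(set(mc) & set(oe))
    gaps = [mc[y] - oe[y] for y in years]
    # Years in which open-ended items were as easy as or easier than
    # multiple-choice items (the "crossing over" pattern).
    reversal_years = [y for y, g in zip(years, gaps) if g <= 0]
    gap_spread = max(gaps) - min(gaps)
    order_holds = not reversal_years
    return {
        "years": years,
        "gaps": gaps,
        "reversal_years": reversal_years,
        "gap_spread": gap_spread,
        "order_holds": order_holds,
        "parallel": order_holds and gap_spread <= max_gap_spread,
    }


# Illustrative, made-up series: NOT real NAEP or NYSTP data.
steady_mc = {2007: 60.0, 2009: 61.0, 2011: 62.0}
steady_oe = {2007: 40.0, 2009: 41.5, 2011: 42.0}

choppy_mc = {2006: 70.0, 2007: 74.0, 2008: 68.0, 2009: 75.0}
choppy_oe = {2006: 62.0, 2007: 60.0, 2008: 71.0, 2009: 66.0}

steady = check_item_consistency(steady_mc, steady_oe)
choppy = check_item_consistency(choppy_mc, choppy_oe)

print("steady:", steady["parallel"], steady["gaps"])
print("choppy:", choppy["parallel"], choppy["reversal_years"])
```

With real item averages plugged in, a non-empty `reversal_years` list or a large `gap_spread` would flag the kind of incoherence discussed here; what counts as "large" is a judgment call, which is why the tolerance is left as a parameter.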

I

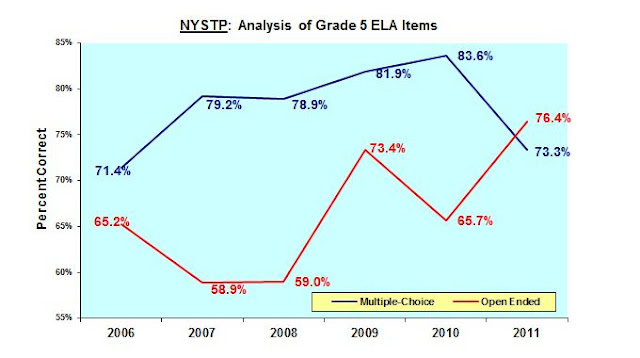

found incongruities in all grades measured by NYSTP. On the ELA,

divergent outcomes on the two types of items are noteworthy for grades

5, 6 and 7 in 2010 and 2011. The fifth grade items provide a jarring

illustration, because they continue to function incoherently in the

years of promised reform. Averages on the open-ended items increase (by

10.7%), as sharply as the multiple-choice averages fall (10.3%)—crossing

over them last year.

It all goes unnoticed. Press releases are written in terms of overall results without acknowledging or treating NYSTP’s separate parts. This is odd since so much time and money go into administering and scoring the more challenging, higher-level open-ended items.

So, we’ve had a program that has made a mockery out of accountability, with the head of the Regents running interference for it.

Parents watch helplessly as their children’s schools become testing centers. And the quality of teachers is weighed on scales that are out of balance, as Governor Cuomo takes a bow for leveraging an evaluation system that depends on state test results to determine if a teacher is effective.

If the test numbers aren’t good enough, there’s no way teachers can compensate by demonstrating other strengths needed to foster learning and growth. Within days it is likely that newspapers will publish the names of teachers and the grades they’ve received based on their students’ test scores going back three years. As shown, however, these results are derived from tests that fail to make sense.

The graphs are prima facie evidence that the vendor and Albany have delivered a defective product. High-stakes decisions about students, teachers and schools have depended on it. An independent investigation of NYSTP is imperative to determine what happened and, if warranted, to seek recovery of damages.

It is also time for the victims (parents in defense of their children, and teachers in support of students, parents and their own self-interest) to band together and just say “No!” to this April’s six days of testing.

---Fred Smith, a retired Board of Education senior analyst, worked for the city public school system in test research and development.